Novelty

The initial fascination and enthusiasm for a new

technology or an innovation that does not yet have the needed evidence to

support its adoption.

Novelty bias poses a likely occupational hazard due to

our reliance upon new information technology. A total of 28% of the MLA leaders

reported frequently observing others engaged in Novelty bias. Many

of our non-HIP colleagues have come to expect us to engage with new technology

as unofficial institutional early adopters [41]. New information technology

often involves complex relationships with vendors wanting to make large sales,

so these decisions can be expensive for an institution. Studies have

illustrated how positive early reports on innovations often are countered

or at least tempered by subsequent or more rigorous studies

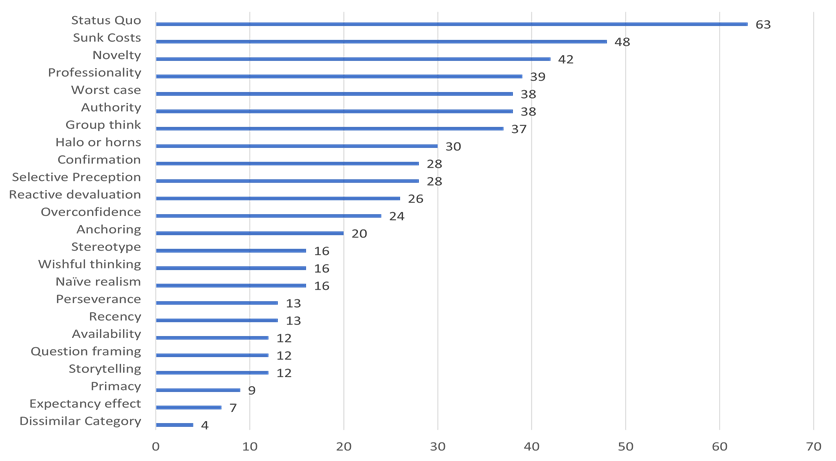

[42-43]. The top three ranked forms of cognitive bias among HIPs, thus far,

probably reflect a larger societal tension between the need to innovate and

the practicalities of conserving resources and maintaining

efficient operations.

Professionology

Viewing a situation through the shared perceptions of

one’s profession rather than by taking a broader perspective. Sometimes known

as “Professional Deformation.”

Professionology might be one of the oldest forms of cognitive

bias recognized by the social sciences, although it has not been extensively

studied since its initial identification in 1915. From the outset, it was seen

as a distortion that people undergo in the process of their socialization into

a specific profession. It derived, in part, from a sense of “exaggerated

importance” [Page 31] attached to one’s professional roles [44]. Military

professionals were portrayed in this study as epitomizing “professional deformation”

(as it was once known). Physicians, attorneys, social workers, teachers,

nurses, and members of the clergy also were susceptible to Professionology [44].

The implicit sense of a separate if not superior identity seems to reinforce a

sense of Professionology in most or possibly all professions [45-55].

Professionology represents a form of the broader and more studied cognitive

bias of Ingroup-Outgroup bias [56-60].

The present study revealed that 26% of the MLA leaders

identified Professionology as a common form of cognitive bias within our

profession. While little research has explicitly addressed mitigating the

bias of Professionology, some limited research has been conducted on reducing

Ingroup-Outgroup bias. One mitigation strategy involves prompting regular

interactions between members of the two groups. HIPs have a natural avenue to

reduce their Professionology due to their potential for frequent interactions

with other health professionals. Second, framing the two or more groups as members of a

broader group can reduce the insularity of any one subgroup within the larger

group. Third, encouraging opportunities for friendships or collaborations among

members of different groups also might reduce Professionology [61-63]. Explicit

efforts to re-classify groups with different categorizations might reduce

Ingroup-Outgroup bias [64]. Encouraging members of groups to attempt to be more

empathetic toward members of other groups also might help [65-66]. Fostering

deeper individual relationships among members of different groups was one

promising approach to reducing intergroup bias [67]. One team of researchers has

explored the use of ‘science curiosity’ as a mitigating strategy for reducing

intergroup perceptions. They define science curiosity as an open-minded

willingness to engage with surprising information that runs counter to one’s

own attitudes [68-69].

Authority

Deferring to an expert or other authority figure

to a degree disproportionate to the extent of their expertise or the range of their

authority on the subject.

Most of us work in hierarchal organizations with clear

lines of responsibility for making decisions [70]. This hierarchal context

might explain the high ranking in this study of this form of cognitive bias.

The practical, ethical, and sometimes legal issues related to abuses of

authority are well-known [71-74]. While extreme abuses of authority might lead

to authoritarianism [75], more often an authority figure’s overreach beyond

their range of expertise leads to annoyance among those lower in the hierarchy; it

also can reduce the organization’s efficiency. Although Authority bias is difficult to

counter, several studies [76-78] have suggested mitigation strategies,

as summarized in Table 3.

Worst-Case Scenario

Emphasizing or exaggerating those possible negative

outcomes disproportionate to all possible outcomes.

Worst-Case Scenario bias was a surprise finding in this

study, as it rarely rises to this high a ranking with other surveyed

professional populations. The present study produced a 25.5% frequency of

mention by HIP leaders. Worst-Case Scenario might be thought of as an extreme

form of pessimism [79]. Worst-Case Scenario might represent an historical

artifact [80] within this study, prompted by lingering psychological trauma in

the US population brought on by the worldwide Covid-19 Pandemic.

Worst-Case Scenario bears a close connection to other

similar forms of cognitive bias such as patient Catastrophizing [81-83] and

Negativity Bias [84-87]. The close relationship of Worst-Case Scenario to

Catastrophizing and particularly to the Negativity Effect might lend clues to

its mitigation. Table 3 offers mitigation strategies for Worst-Case

Scenario bias based on prior research [88-90]. Two studies have cautioned

against an absolute rejection of Worst-Case Scenario bias due to the

possibility that pessimists might have a more realistic view of the situation

than others in the group [91].

Group Think

Believing in the autonomy of a group, stereotyping of

those outside the group, self-censoring, censoring of dissenters, maintaining

the illusion of unanimity, and enforcing a group “consensus” viewpoint.

The present study leveraged the tendency for people to be

able to spot cognitive biases in others. Those same cognitive biases are not at

all obvious to those observed colleagues. One of the most-often mentioned

antidotes to many cognitive biases relies upon the wisdom of the group to

detect flaws in individual decision-making processes. Groups are a great way to

generate ideas and to spot individual limitations in reasoning that leads to a

decision. Singh and Brinster refer to this evolutionary advantage in humans as

‘shared intentionality’ (Page 118) in collective action [92].

What happens, though, when the group itself becomes the

source of cognitive bias? Group Think was first recognized over 50 years ago

when groups of highly intelligent, well-educated US Government officials who

were making high-stakes foreign policy decisions succumbed instead to taking

dangerous risks [93]. Group Think has been studied in a variety of settings

since these early exploratory works. Some of the identified antecedent

conditions to Group Think include particular leadership styles, rigid group processes,

and certain behaviors [94]. Other factors increasing the likelihood of Group Think

include individuals closely aligning their individual identities to the group,

attraction to the group itself, and group cohesion. Friendships within a group

might exert a mild counterbalance to Group Think [95]. Others seem to have

found less supporting evidence for group cohesiveness or certain leadership

styles as drivers of Group Think [96]. Several techniques to counter Group Think

summarized in Table 3 have demonstrated some success [97-99]. Group

processes oftentimes do not exhibit Group Think. Contexts involving complex

variables, emotional competencies, and human relations can generate group

processes that definitely can outperform individual efforts [100].

General Mitigation Strategies

For purposes of efficiency, it might be fruitful to

identify general strategies to mitigate our human tendencies to be swayed by

all or most of our cognitive biases when making decisions. General mitigation

strategies presently are not well-developed and lack sufficient evidence to be

much help [101-102]. A few studies offer clues as to how to generally proceed

to avoid cognitive biases. Etzioni offers the blunt advice that decision makers

should “assume that whatever decisions they render—especially first ones—are

wrong and will have to be revised, most likely several times” [103]. Similarly,

counterfactual reasoning, the practice of considering that one is wrong, appeared

to offset cognitive bias tendencies in a study of 34 nursing students [104]. Nearly

300 management graduate students reduced their cognitive biases through

counterfactual reasoning, provided that these participants were not

overconfident in their knowledge of the subject [105]. One study involved

offering a number of plausible outcomes to a decision, rather than just the

opposite of what was predicted, to lower cognitive bias scores [106]. Asking

decision makers to justify their decisions tended to aid self-reflection to

slow any slide into cognitive biases [107]. Skill in scientific reasoning and

training in statistics have been found to deter cognitive biases [108-109]. One

neuroscientist has suggested that we use a socially-supported environment to

make more abstract yet more rational choices more viscerally tangible [110].

Intergroup Comparisons

It would be interesting to replicate this study involving

MLA leaders in several years to compare results. This constellation of

cognitive biases both resembles and differs from those of other groups that have taken

similar cognitive bias surveys administered by the first author. A seminar of

local business leaders in 2008 ranked the following cognitive biases highly:

Halo or Horns Effect; Group Think; Anchoring; and, Expectancy Effect. In recent

years the first author’s second-year medical students have consistently ranked

highest Group Think, Confirmation Bias, Authority, and Anchoring forms of

cognitive bias.

In recent months the first author has enlisted public

health and medical colleagues to replicate this study in their respective

professions. Replications could also take place within single HIP workplaces or

in different related organizations other than MLA. It would be exciting to use

quasi-experimental or randomized controlled trial research designs to test the

effectiveness of the aforementioned mitigation strategies.

Limitations

Analyzing the representativeness of actual participants

in the survey in comparison to the contacted baseline population tends to

validate these kinds of surveys. In reference to the peer review process above,

these experts will assess representativeness of the participants. For example,

if survey respondents only hail from two certain geographic regions of the US,

this limitation possibly will modulate the validity of the survey results. Or,

as another example, if one type of library is overrepresented, that, too, could

modify the interpretation of the results.

On May 15, one participant noticed that the initial list

presented to participants did not include the term Group Think. Part 2 of the

survey, however, included the term Group Think with its definition in this

voting phase. This omission was fixed within 15 minutes at 10am on May 15th

by the REDCap Administrator. This omission seems unlikely to have made even a

marginal difference given the fact that it did appear with a definition when

participants voted.

There are two foreseen deliverables from this study.

First, HIPs will benefit in their daily decision-making roles by recognizing

the most commonly-encountered forms of cognitive biases. Second, EBP is a

framework employed by professionals for making informed decisions. Other than

the study in 2007, there are no studies on cognitive biases in decision making

contexts for HIPs so this will fill a gap in the research evidence base.

Funding

This project was funded by a Seed Grant from the University

of New Mexico Health Sciences Library and Informatics Center. Statistical

services were aided by a grant from the National Center for Advancing

Translational Sciences, National Institutes of Health under grant number

UL1TR001449.

Acknowledgements

The authors thank colleagues Marie T. Ascher, Heather

Holmes, Karen M. Heskett, and Margaret Hoogland for compiling the email

addresses of the MLA leaders used in this project, which were originally gathered for

the 2024 MLA Research Agenda [20]. Volunteers who reviewed the content of the

survey instrument or tested the functionality of the online survey platform

included: Deirdre Caparoso; Martha Earl; Leah Everitt; Robyn Gleasner; Laura

Hall; Ingrid Hendrix; George Hernandez; Rachel Howarth; Danielle

Maurici-Pollock; Abbie Olivas; Melissa Rethlefsen; Deborah Rhue; and Talicia

Tarver. On May 7, 2024, several colleagues volunteered to pilot the actual

REDCap survey, mostly for functionality of the interface. Special kudos to

Danielle Maurici-Pollock for subjecting the survey in REDCap to an acid test

during the first online pilot and for re-testing it two more times as it became

ready for launching. Matthew Manicke served ably as the REDCap administrator

who aided the first author in implementing the survey.

Appendix

The following supplementary resources can be found via a

single link: https://digitalrepository.unm.edu/hslic-publications-papers/98/

· Supplementary Table One: 2007 Cognitive Bias Summary

· Supplementary Table Two: Participation by MLA Leader Categories

· Detailed Methods Description

Data Availability Statement

The full data set for this anonymous survey is available

from the Data Appendix at: https://digitalrepository.unm.edu/hslic-publications-papers/97/.

REFERENCES

1. Booth A. Barriers and facilitators to evidence-based library and information practice: an international perspective. Perspectives in International Librarianship 2011; 1: 1-15.

2. Clancy CM, Cronin K. Evidence-based decision making: global evidence, local decisions. Health Aff (Millwood). 2005;24(1):151-162. doi:10.1377/hlthaff.24.1.151

3. Eldredge JD. Evidence Based Practice: A Decision-Making Guide for Health Information Professionals. Peer Reviewed, Open Access. Albuquerque, New Mexico: University of New Mexico Health Sciences Library and Informatics Center, 2024. ISBN 979-8-218-34249-4. Available from: <https://www.ncbi.nlm.nih.gov/books/NBK603117/>. doi:10.25844/0PWE-9H68

4. Sadik MA. The mystery decisions leaders make: Why do leaders make strange decisions when it comes to people? HR Future. 2023;(10):62-64. Accessed April 19, 2024. https://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=172538846&site=ehost-live&scope=site

5. Lee Y. Evolutionary psychology theory: can I ever let go of my past? In: Appel-Meulenbroek R, Danivska V, eds. A Handbook of Theories on Designing Alignment between People and the Office Environment. Routledge/Taylor & Francis Group; 2021. doi:10.1201/9781003128830

6. Andrews PW. The psychology of social chess and the evolution of attribution mechanisms: Explaining the fundamental attribution error. Evolution and Human Behavior. 2001;22(1):11-29. doi:10.1016/S1090-5138(00)00059-3

7. Haselton MG, Nettle D, Andrews PW. The Evolution of Cognitive Bias. In: Buss DM, ed. The Handbook of Evolutionary Psychology. John Wiley & Sons, Inc.; 2005:724-746. https://doi.org/10.1002/9780470939376.ch25

8. Over D. Rationality and the normative description distinction. In: Koehler DJ, ed. Blackwell Handbook of Judgment and Decision Making. John Wiley & Sons, Incorporated; 2007: 1-18. Accessed March 18, 2025. https://public.ebookcentral.proquest.com/choice/PublicFullRecord.aspx?p=7103359

9. Dang J. Is there an alternative explanation to the evolutionary account for financial and prosocial biases in favor of attractive individuals? Behav Brain Sci. 2017;40:e25. doi:10.1017/S0140525X16000467

10. Lee Y. Behavioral economic theory: masters of deviations, irrationalities, and biases. In: Appel-Meulenbroek R, Danivska V, eds. A Handbook of Theories on Designing Alignment between People and the Office Environment. Routledge/Taylor & Francis Group; 2021. doi:10.1201/9781003128830

11. Blankenburg Holm D, Drogendijk R, Haq H ul. An attention-based view on managing information processing channels in organizations. Scandinavian Journal of Management. 2020;36(2). doi:10.1016/j.scaman.2020.101106

12. Leung BTK. Limited cognitive ability and selective information processing. Games and Economic Behavior. 2020;120:345-369. doi:10.1016/j.geb.2020.01.005

13. Baron J. Thinking and Deciding. Cambridge University Press, 1988: 259-61.

14. Plous S. The Psychology of Judgment and Decision Making. McGraw-Hill, 1993.

15. Pronin E, Lin DY, Ross L. The bias blind spot: Perceptions of bias in self versus others. Personality and Social Psychology Bulletin. 2002;28(3):369-381. doi:10.1177/0146167202286008

16. Pronin E, Hazel L. Humans’ bias blind spot and its societal significance. Current Directions in Psychological Science. 2023;32(5):402-409. doi:10.1177/09637214231178745

17. Pronin E, Gilovich T, Ross L. Objectivity in the Eye of the Beholder: Divergent Perceptions of Bias in Self Versus Others. Psychological Review. 2004;111(3):781-799. doi:10.1037/0033-295X.111.3.781

18. Eldredge JD. Cognitive biases as obstacles to effective decision making. Presentation. Fourth International Evidence Based Library and Information Practice Conference (EBLIP4). Durham, NC. 2007.

19. Helliwell M. Reflections of a Practitioner in an Evidence Based World: 4th International Evidence Based Library & Information Practice Conference. Evidence Based Library and Information Practice, 2(2), 120–122. https://doi.org/10.18438/B8P88R.

20. Ascher M, Hoogland M, Heskett K, Holmes H, Eldredge JD. Making an impact: the new 2024 Medical Library Association Research Agenda. J Med Libr Assoc 2025 Jan; 113(1): 24-30.

21. Baron J. Thinking and Deciding. Cambridge University Press, 1988: 259-61.

22. Plous S. The Psychology of Judgment and Decision Making. McGraw-Hill, 1993.

23. Moore DW. Measuring new types of question effects: additive and subtractive. Public Opinion Quarterly 2002; 66: 80-91.

24. Carp FM. Position effects on interview responses. Journal of Gerontology 1974; 29 (5): 581-87.

25. Bostrom N, Ord T. The Reversal Test: Eliminating Status Quo Bias in Applied Ethics. Ethics. 2006;116(4):656-679. doi:10.1086/505233

26. Samuelson W, Zeckhauser R. Status quo bias in decision making. Journal of Risk and Uncertainty. 1988;1(1):7-59. doi:10.1007/BF00055564

27. Anderson CJ. The Psychology of Doing Nothing: Forms of Decision Avoidance Result from Reason and Emotion. Psychological Bulletin. 2003;129:139-167. https://doi.org/10.1037/0033-2909.129.1.139

28. Hosli MO. Power, Connected Coalitions, and Efficiency: Challenges to the Council of the European Union. International Political Science Review / Revue internationale de science politique. 1999;20(4):371-391. 10.1177_019251219902000404.pdf

29. Geletkanycz MA, Black SS. Bound by the past? Experience-based effects on commitment to the strategic status quo. Journal of Management. 2001; 27(1):3-21. doi:10.1016/S0149-2063(00)00084-2

30. Camilleri AR, Sah S. Amplification of the status quo bias among physicians making medical decisions. Applied Cognitive Psychology. 2021;35(6):1374-1386. doi:10.1002/acp.3868

31. Silver WS, Mitchell TR. The status quo tendency in decision making. Organizational Dynamics. 1990; 18(4):34-46. doi:10.1016/0090-2616(90)90055-T

32. Oschinsky FM, Stelter A, Niehaves B. Cognitive biases in the digital age - How resolving the status quo bias enables public-sector employees to overcome restraint. Government Information Quarterly. 38(4). doi:10.1016/j.giq.2021.101611

33. Ford JD, Ford LW. Stop Blaming Resistance to Change and Start Using It. Organizational Dynamics. 2010;39(1):24-36. doi:10.1016/j.orgdyn.2009.10.002

34. Malek N, Acchiardo C-J. Dismal dating: A student’s guide to romance using the economic way of thinking. Journal of Private Enterprise. 2020;35(3):93-108.

35. Arkes HR, Blumer C. The psychology of sunk cost. Organizational Behavior and Human Decision Processes. 35(1):124-140. doi:10.1016/0749-5978(85)90049-4

36. Kovács K. The impact of financial and behavioural sunk costs on consumers’ choices. Journal of Consumer Marketing. 2024;41(2):213-225. doi:10.1108/JCM-06-2023-6099

37. Davis LW, Hausman C. Who Will Pay for Legacy Utility Costs? Journal of the Association of Environmental and Resource Economists. 2022;9(6):1047-1085. http://dx.doi.org/10.1086/719793

38. Jhang J, Lee DC, Park J, Lee J, Kim J. The impact of childhood environments on the sunk-cost fallacy. Psychology & Marketing. 2023;40(3):531-541. doi:10.1002/mar.21750

39. Rekar P, Pahor M, Perat M. Effect of emotion regulation difficulties on financial decision-making. Journal of Neuroscience, Psychology, and Economics. 2023;16(2):80-93. doi:10.1037/npe0000172

40. Tamada Y, Tsai T-S. Delegating the decision-making authority to terminate a sequential project. Journal of Economic Behavior and Organization. 2014;99:178-194. doi:10.1016/j.jebo.2014.01.007

41. Rogers EM. Diffusion of Innovations. 5th ed. Free Press; 2003.

42. Catalogue of Bias Collaboration. Persaud N, Heneghan C. Novelty Bias. In: Catalogue Of Bias: https://catalogofbias.org/biases/novelty-bias/. Accessed 7 October 2024.

43. Liang Z, Mao J, Li G. Bias against scientific novelty: A prepublication perspective. Journal of the Association for Information Science and Technology. 2023;74(1):99-114. doi:10.1002/asi.24725

44. Langerock H. Professionalism: a study in professional deformation. American Journal of Sociology 1915 Jul; 21 (1): 30-44. https://www.jstor.org/stable/2763633

45. Levin S, Federico CM, Sidanius J, Rabinowitz JL. Social Dominance Orientation and Intergroup Bias: The Legitimation of Favoritism for High-Status Groups. Personality and Social Psychology Bulletin. 2002;28(2):144-157. https://doi.org/10.1177/0146167202282002

46. Rabow MW, Evans CN, Remen RN. Professional formation and deformation: repression of personal values and qualities in medical education. Fam Med. 2013;45(1):13-18.

47. du Toit D. A sociological analysis of the extent and influence of professional socialization on the development of a nursing identity among nursing students at two universities in Brisbane, Australia. J Adv Nurs. 1995;21(1):164-171. doi:10.1046/j.1365-2648.1995.21010164.x

48. Horowitz A. On Looking: A Walker’s Guide to the Art of Observation. First Scribner hardcover edition. Scribner, an imprint of Simon & Schuster, Inc.; 2013. Accessed December 5, 2024. https://public.ebookcentral.proquest.com/choice/publicfullrecord.aspx?p=5666838

49. Edwards D. The myth of the neutral reporter. New Statesman 2004 Aug 9: 12-13.

50. Schudson M. Social Origins of Press Cynicism in Portraying Politics. American Behavioral Scientist. 1999;42(6):998-1008. doi:10.1177/00027649921954714

51. Bostwick ED, Stocks MH, Wilder WM. Professional Affiliation Bias among CPAs and Attorneys at Publicly Traded US Firms. In: Advances in Accounting Behavioral Research. 2019:121-152. doi:10.1108/S1475-148820190000022007

52. Witkowski SA. An implicit model for the prediction of managerial effectiveness. Polish Psychological Bulletin. Published online 1996.

53. MacLean CL, Dror IE. Measuring base-rate bias error in workplace safety investigators. Journal of Safety Research. 2023;84:108-116. doi:10.1016/j.jsr.2022.10.012

54. Thomas O, Reimann O. The bias blind spot among HR employees in hiring decisions. German Journal of Human Resource Management. 2023;37(1):5-22. doi:10.1177/23970022221094523

55. Moskvina NB. The Schoolteacher’s Risk of Personality and Professional Deformation. Russian Education and Society. 2006;48(11):74-88.

56. Moradi Z, Najlerahim A, Macrae CN, Humphreys GW. Attentional saliency and ingroup biases: From society to the brain. Social Neuroscience. 2020;15(3):324-333. doi:10.1080/17470919.2020.1716070

57. Wann DL, Grieve FG. Biased Evaluations of In-Group and Out-Group Spectator Behavior at Sporting Events: The Importance of Team Identification and Threats to Social Identity. The Journal of Social Psychology. 2005;145(5):531-545. doi:10.3200/SOCP.145.5.531-546

58. Armenta BM, Scheibe S, Stroebe K, Postmes T, Van Yperen NW. Dynamic, not stable: Daily variations in subjective age bias and age group identification predict daily well-being in older workers. Psychology and Aging. 2018;33(4):559-571. doi:10.1037/pag0000263

59. Van Bavel JJ, Pereira A. The partisan brain: An identity-based model of political belief. Trends in Cognitive Sciences. 2018;22(3):213-224. doi:10.1016/j.tics.2018.01.004

60. Weimer DL. Institutionalizing Neutrally Competent Policy Analysis: Resources for Promoting Objectivity and Balance in Consolidating Democracies. Policy Studies Journal. 2005;33(2):131-146. doi:10.1111/j.1541-0072.2005.00098.x

61. Gaertner SL, Dovidio JF, Rust MC, et al. Reducing intergroup bias: Elements of intergroup cooperation. Journal of Personality and Social Psychology. 1999;76(3):388-402. doi:10.1037/0022-3514.76.3.388

62. Dovidio JF, Love A, Schellhaas FMH, Hewstone M. Reducing intergroup bias through intergroup contact: Twenty years of progress and future directions. Group Processes & Intergroup Relations. 2017;20(5):606-620. doi:10.1177/1368430217712052

63. Ensari N, Miller N. The out-group must not be so bad after all: The effects of disclosure, typicality, and salience on intergroup bias. Journal of Personality and Social Psychology. 2002;83(2):313-329. doi:10.1037/0022-3514.83.2.313

64. Crisp RJ, Hewstone M, Rubin M. Does multiple categorization reduce intergroup bias? Personality and Social Psychology Bulletin. 2001;27(1):76-89. doi:10.1177/0146167201271007

65. Prati F, Crisp RJ, Rubini M. 40 years of multiple social categorization: A tool for social inclusivity. European Review of Social Psychology. 2021;32(1):47-87. doi:10.1080/10463283.2020.1830612

66. Hewstone M, Rubin M, Willis H. Intergroup bias. Annual Review of Psychology. 2002;53(1):575-604. doi:10.1146/annurev.psych.53.100901.135109

67. Liebkind K, Haaramo J, Jasinskaja-Lahti I. Effects of contact and personality on intergroup attitudes of different professionals. Journal of Community & Applied Social Psychology. 2000;10(3):171-181. doi:10.1002/1099-1298(200005/06)10:3<171::AID-CASP557>3.0.CO;2-I

68. Kahan DM, Landrum A, Carpenter K, Helft L, Jamieson KH. Science curiosity and political information processing. Political Psychology. 2017;38(Suppl 1):179-199. doi:10.1111/pops.12396

69. Motta M, Chapman D, Haglin K, Kahan D. Reducing the administrative demands of the Science Curiosity Scale: A validation study. International Journal of Public Opinion Research. 2021;33(2):215-234. doi:10.1093/ijpor/edz049

70. Aghion P, Tirole J. Formal and Real Authority in Organizations. Journal of Political Economy. 1997;105(1):1-29. https://doi.org/10.1086/262063

71. Ross L, Nisbett RE. The Person and the Situation: Perspectives of Social Psychology. Temple University Press; 1991: 52-8.

72. Milgram S. Obedience to Authority: An Experimental View. Harper & Row; 1974.

73. Hock RR. Forty Studies That Changed Psychology: Explorations into the History of Psychological Research. 7th ed. Pearson; 2013: 306-15.

74. Blass T. The Milgram Paradigm After 35 Years: Some Things We Now Know About Obedience to Authority. Journal of Applied Social Psychology. 1999;29(5):955-978. doi:10.1111/j.1559-1816.1999.tb00134.x

75. Albright MK, Woodward W. Fascism: A Warning. First edition. Harper, an imprint of HarperCollins Publishers; 2018.

76. Shi R, Guo C, Gu X. Authority updating: An expert authority evaluation algorithm considering post-evaluation and power indices in social networks. Expert Systems. 2021;38(1). doi:10.1111/exsy.12605

77. Tarnow E. Towards the Zero Accident Goal: Assisting the First Officer: Monitor and Challenge Captain Errors. Journal of Aviation/Aerospace Education & Research. 2000;10(1):8. doi:10.15394/jaaer.2000.1269

78. Austin JP, Halvorson SAC. Reducing the Expert Halo Effect on Pharmacy and Therapeutics Committees. JAMA. 2019;321(5):453-454. doi:10.1001/jama.2018.20789

79. Hey JD. The Economics of Optimism and Pessimism: A D. Kyklos. 1984;37(2):181-205. doi:10.1111/j.1467-6435.1984.tb00748.x (p. 183).

80. Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Houghton Mifflin; 2002: 56; 179.

81. Sullivan MJL. The Pain Catastrophizing Scale: Development and Validation. Psychological Assessment. 1995;7(4):524-532. doi:10.1037/1040-3590.7.4.524

82. Garnefski N, Kraaij V. Cognitive emotion regulation questionnaire - development of a short 18-item version (CERQ-short). Personality and Individual Differences. 2006; 41(6):1045-1053. doi:10.1016/j.paid.2006.04.010

83. Zhan L, Lin L, Wang X, Sun X, Huang Z, Zhang L. The moderating role of catastrophizing in day-to-day dynamic stress and depressive symptoms. Stress and Health: Journal of the International Society for the Investigation of Stress. 2024;40(4). doi:10.1002/smi.3404

84. Kellermann K. The negativity effect and its implications for initial interaction. Communication Monographs. 1984;51(1):37-55. (p. 37). doi:10.1080/03637758409390182

85. Yang L, Unnava HR. Ambivalence, Selective Exposure, and Negativity Effect. Psychology and Marketing. 2016;33(5):331-343. doi:10.1002/mar.20878

86. Klein JG. Negativity Effects in Impression Formation: A Test in the Political Arena. Personality and Social Psychology Bulletin. 1991;17(4):412-418. doi:10.1177/0146167291174009

87. Klein JG, Ahluwalia R. Negativity in the Evaluation of Political Candidates. Journal of Marketing. 2005;69(1):131-142. https://doi.org/10.1509/jmkg.69.1.131.5550

88. Fracalanza K, Raila H, Rodriguez CI. Could written imaginal exposure be helpful for hoarding disorder? A case series. Journal of Obsessive-Compulsive and Related Disorders. 2021; 29: 1-5. doi:10.1016/j.jocrd.2021.100637

89. Goodwin P, Gönül S, Önkal D, Kocabıyıkoğlu A, Göğüş CI. Contrast effects in judgmental forecasting when assessing the implications of worst and best case scenarios. Journal of Behavioral Decision Making. 2019;32(5):536-549. doi:10.1002/bdm.2130

90. Wood S, Kisley MA. The negativity bias is eliminated in older adults: age-related reduction in event-related brain potentials associated with evaluative categorization. Psychology and Aging. 2006;21(4):815-820. doi:10.1037/0882-7974.21.4.815

91. Sonoda A. Optimistic bias and pessimistic realism in judgments of contingency with aversive or rewarding outcomes. Psychological Reports. 2002;91(2):445-456.

92. Singh R, Brinster KN. Fighting Fake News: The Cognitive Factors Impeding Political Information Literacy. In: Libraries and the Global Retreat of Democracy: Confronting Polarization, Misinformation, and Suppression. 2021:109-131. doi:10.1108/S0065-283020210000050005

93. Janis IL. Groupthink and group dynamics: a social psychological analysis of defective policy decisions. Policy Studies Journal. 1973 Sep;2(1):19-25. doi:10.1111/j.1541-0072.1973.tb00117.x

94. Schafer M, Crichlow S. Antecedents of Groupthink: A Quantitative Study. Journal of Conflict Resolution. 1996;40(3):415-435. doi:10.1177/0022002796040003002

95. Hogg MA, Hains SC. Friendship and group identification: a new look at the role of cohesiveness in groupthink. European Journal of Social Psychology. 1998;28(3):323-341. doi:10.1002/(SICI)1099-0992(199805/06)28:3<323::AID-EJSP854>3.0.CO;2-Y

96. Kerr NL, Tindale RS. Group performance and decision making. Annual Review of Psychology. 2004;55:623-655. https://doi.org/10.1146/annurev.psych.55.090902.142009

97. Janis IL. Groupthink: psychological studies of policy decisions and fiascoes. Boston: Houghton Mifflin Company, 1983: 260-76.

98. Hodson G, Sorrentino RM. Groupthink and uncertainty orientation: Personality differences in reactivity to the group situation. Group Dynamics: Theory, Research, and Practice. 1997;1(2):144-155. doi:10.1037/1089-2699.1.2.144

99. Lee CE, Martin J. Obama warns against White House ‘groupthink.’ Politico December 1, 2008. Available from: <https://www.politico.com/story/2008/12/obama-warns-against-wh-groupthink-016076>. Accessed 8 December 2024.

100. De Villiers R, Hankin R, Woodside AG. Making decisions well and badly: how stakeholders’ discussions influence executives’ decision confidence and competence. In: Woodside AG. Making Tough Decisions Well and Badly: Framing, Deciding, Implementing, Assessing. Emerald; 2016. Accessed December 6, 2024. http://www.dawsonera.com/depp/reader/protected/external/AbstractView/S9781786351197

101. Korteling JEH, Gerritsma JYJ, Toet A. Retention and Transfer of Cognitive Bias Mitigation Interventions: A Systematic Literature Study. Frontiers in Psychology. 2021;12:629354. doi:10.3389/fpsyg.2021.629354

102. Shlonsky A, Featherston R, Galvin KL, et al. Interventions to Mitigate Cognitive Biases in the Decision Making of Eye Care Professionals: A Systematic Review. Optom Vis Sci. 2019;96(11):818-824. doi:10.1097/OPX.0000000000001445

103. Etzioni A. Humble Decision-Making Theory. Public Management Review. 2014;16(5):611-619. [Page 612]. doi:10.1080/14719037.2013.875392

104. Flannelly LT, Flannelly KJ. Reducing People’s Judgment Bias About Their Level of Knowledge. The Psychological Record. 2000;50(3):587. https://doi.org/10.1007/BF03395373

105. Hoch SJ. Counterfactual reasoning and accuracy in predicting personal events. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1985;11(4):719-731. doi:10.1037//0278-7393.11.1-4.719

106. Hirt ER, Markman KD. Multiple explanation: A consider-an-alternative strategy for debiasing judgments. Journal of Personality and Social Psychology. 1995;69(6):1069-1086. doi:10.1037//0022-3514.69.6.1069

107. Isler O, Yilmaz O, Dogruyol B. Activating reflective thinking with decision justification and debiasing training. Judgment and Decision Making. 2020;15(6):926-938. doi:10.1017/S1930297500008147

108. Cavojová V, Šrol J, Jurkovic M. Why Should We Try to Think Like Scientists? Scientific Reasoning and Susceptibility to Epistemically Suspect Beliefs and Cognitive Biases. Applied Cognitive Psychology. 2020;34(1):85-95.

109. Fong GT. The Effects of Statistical Training on Thinking about Everyday Problems. Cognitive Psychology. 1986;18(3):253-292.

110. Falk E. The science of making better decisions. New York Times. 2025 July 6; Opinion: 10.

Supplemental Files

Appendix A

Note:

This appendix document is the JMLA supplemental file version of the

online appendix referred to in text (see Appendix headline for more

information).

Authors’ Affiliations

Jonathan D. Eldredge, MLS,

PhD, jeldredge@salud.unm.edu, https://orcid.org/0000-0003-3132-9450,

Professor, Health Sciences Library and Informatics Center. Professor, Department

of Family & Community Medicine, School of Medicine. Professor, College of

Population Health, University of New Mexico, Albuquerque, NM

Deirdre A. Hill, PhD, DAHill@salud.unm.edu, https://orcid.org/0000-0002-8495-659X,

Associate Professor, School of Medicine, University of New Mexico, Albuquerque,

NM