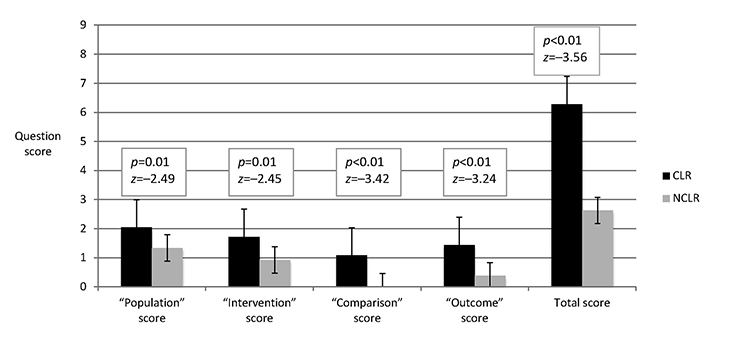

Figure 1 Population, intervention, comparison, outcome (PICO) question quality scores

Riley Brian, Nicola Orlov, Debra Werner, Shannon K. Martin, Vineet M. Arora, Maria Alkureishi

doi: http://dx.doi.org/10.5195/jmla.2018.254

Received June 2017; Accepted October 2017

ABSTRACT

Objective

The investigation sought to determine the effects of a clinical librarian (CL) on inpatient team clinical questioning quality and quantity, learner self-reported literature searching skills, and use of evidence-based medicine (EBM).

Methods

Clinical questioning was observed over 50 days of inpatient pediatric and internal medicine attending rounds. A CL was present for 25 days and absent for 25 days. Questioning was compared between groups. Question quality was assessed by a blinded evaluator, who used a rubric adapted from the Fresno Test of Competence in Evidence-Based Medicine. Team members were surveyed to assess perceived impacts of the CL on rounds.

Results

Rounds with a CL (CLR) were associated with significantly increased median number of questions asked (5 questions CLR vs. 3 NCLR; p<0.01) and answered (3 CLR vs. 2 NCLR; p<0.01) compared to rounds without a CL (NCLR). CLR were also associated with increased mean time spent asking (1.39 minutes CLR vs. 0.52 NCLR; p<0.01) and answering (2.15 minutes CLR vs. 1.05 NCLR; p=0.02) questions. Rounding time per patient was not significantly different between CLR and NCLR. Questions asked during CLR scored twice as high on the adapted Fresno Test rubric as questions asked during NCLR (p<0.01). Select participants described how the CL’s presence improved their EBM skills and care decisions.

Conclusions

Inpatient CLR were associated with more and improved clinical questioning and subjectively perceived to improve clinicians’ EBM skills. CLs may directly affect patient care; further study is required to assess this. CLs on inpatient rounds may be an effective means for clinicians to learn and use EBM skills.

The increasing volume of complex medical literature and concurrent time constraints have hindered clinicians’ abilities to search for information and apply evidence-based medicine (EBM) skills to clinical practice [1–4]. In fact, studies have shown that clinicians do not pursue or find answers for about half of their clinical questions that arise during everyday practice [5–8]. The greatest barrier to answering clinical questions, insufficient time, has also contributed to physician burnout and poor patient outcomes [9, 10]. Indeed, time pressure may detract from physicians’ satisfaction and can stress their relationships with patients [10, 11].

Clinical librarians (CLs), also known as medical or hospital librarians, have sought to address this problem and have been increasingly involved in clinical practice since the 1970s [12]. Since that time, a growing focus on lifelong learning by the Association of American Medical Colleges (AAMC) and the Accreditation Council for Graduate Medical Education (ACGME) has kindled discussion about the potential role of CLs in medical education [12–14]. When they are involved in clinical practice and medical education, CLs can have positive effects in multiple capacities by planning curricula, facilitating journal clubs, and training clinicians in the use of information services [2, 15–21]. Of note, some CLs act as embedded librarians, saving physicians time by performing literature searches and assisting in answering clinical questions that arise while rounding during real-time patient care [15, 22, 23]. A recent study suggests that deploying CLs into clinical settings in this manner could encourage physicians to answer clinical questions, become lifelong learners, and ensure patient safety [9].

Five systematic reviews have been published assessing the role of CLs in hospital systems and during rounds [15, 16, 22–24]. These reviews have concluded that CLs provide useful information to clinicians to inform their decisions. Indeed, several studies described in these reviews and elsewhere have used objective data to suggest that CLs can positively affect patients and the health care system by shortening hospital stays and reducing costs [25–28]. Other studies, described in these reviews and elsewhere, propose that CLs positively affect clinicians by adding to the culture of learning and clinical teams’ question formulation, improving clinicians’ skill in searching the literature, and facilitating the implementation of evidence-based practices [2, 28–32]. Only one existing study has objectively investigated how the presence of a CL affects clinical questioning on rounds, and it found that the presence of a CL was associated with increased trainee identification of clinical questions on rounds [33]. Other studies have been limited to self-reported surveys, and no study has investigated how the presence of a CL affects certain aspects of clinical question practices on rounds. As such, direct observation may supplement previous studies to provide further depiction of the effect of CLs on inpatient rounds with respect to clinicians’ learning and question formulation.

In this study, we asked whether the presence of a CL was associated with changes in inpatient teams’ clinical questioning, as measured by the number of questions asked and answered, the time spent asking and answering questions, and the quality of questions asked during rounds. Furthermore, we explored changes in inpatient team members’ self-reported ability, comfort, and confidence in searching the literature and applying evidence-based practices.

University of Chicago Medicine is a 568-bed academic medical center on the South Side of Chicago. Two inpatient services were selected for participation in the study. The first service is an internal medicine inpatient team consisting of 1 to 3 medical students, 1 intern, 3 senior residents, and a hospitalist or general medicine attending physician. This service carries a maximum of 12 patients and focuses on transitioning patients from the hospital through to an outpatient setting. The second service is a general pediatric inpatient team consisting of 3 to 4 medical students, 3 interns, 2 senior residents, and a pediatric hospital medicine attending. This service usually carries fewer than 15 patients but has no upper patient limit. Both services admit patients daily.

Both an internal medicine team and a pediatric team were chosen to increase the generalizability of findings. Prior to the start of this study, the CL rounded once weekly on the internal medicine team for one year but did not round on the pediatric team. The CL had been available to any student, trainee, or staff member at the University of Chicago for consultation by phone or email, although utilization of the service was low. The CL gives an annual presentation about library services to all starting interns in their new hospital orientation.

A direct observation instrument (supplemental Appendix A) was developed and used during rounds to record the name of the team, the presence or absence of the CL, the number of patients seen, the total time spent rounding, the total number of clinical questions asked and answered, the time spent asking and answering clinical questions, and the content of clinical questions and nonclinical team questions about EBM resources. A clinical question was defined as a question to which the asker did not know the answer and to which a search of the medical literature might reasonably be expected to produce an answer. For example, “What are this patient’s lab results?” was not considered a clinical question, whereas “If MRSA [methicillin-resistant Staphylococcus aureus] is cultured in the urine of an elderly female, what further diagnostic tests or evaluations are indicated?” was considered a clinical question. The time spent asking and answering clinical questions was limited to the number of minutes spent verbalizing questions and the answers to those questions and did not include time spent looking up questions.

At the beginning of a rotation, medical students, interns, residents, and attendings were informed by a medical student observer, who had been trained by two senior researchers, that they could request a literature search by the CL in person, by email, or through an online submission form in Research Electronic Data Capture (REDCap) [34] hosted at the University of Chicago. The REDCap form asked participants to select their departments and levels of training before describing their questions (supplemental Appendix B). Participants were informed that questions submitted through REDCap would be forwarded to the CL, who would research the questions and send answers to their services’ attendings to discuss with the team within one to three days.

Participants were also given and instructed about a card outlining the basics of asking a question in the population, intervention, comparison, outcome (PICO) format [35] along with a link to library resources and the online search request form (supplemental Appendix C). The PICO information card was distributed and explained by the medical student observer at the beginning of every participant’s rotation regardless of the presence or absence of the CL.

Research of clinical questions during rounds involved the CL’s use of a tablet loaded with several medical applications and resources including UpToDate, PubMed, Micromedex, DynaMed, AccessMedicine, and Lab Tests Online. As the team discussed a patient’s care, members of the team asked the CL clinical questions, or the CL offered to research questions that arose. The CL was present before and after rounds to talk with team members regarding questions but did not interrupt presentations during rounds or provide information regarding a search, except between patient rooms. The CL occasionally solicited additional information to be able to find the most relevant results. Relevant information was summarized and explained by the CL to the team in real time. Articles and more thorough responses were emailed to the asker or the asker’s attending after rounds.

Rounding data were collected for twenty-five days when the CL was not present on rounds (“NCLR”) and for twenty-five days when the CL was present on rounds (“CLR”) (supplemental Appendix D). The medical student observer attended all fifty days of both NCLR and CLR. Since medical students, interns, residents, and attendings had different switch schedules onto and off of the teams, the observation schedule was designed to provide all groups with both CLR and NCLR.

All clinical questions were transcribed as stated during rounds each day for quality assessment. The most completely formed clinical question, based on PICO components, was selected by the observer at the end of rounds each day for quality assessment. Questions with more individual PICO components or with more descriptors within a given component were selected. If two questions appeared to contain the same number of PICO components and descriptors, the longer question was sent for quality evaluation. Question quality was evaluated by a blinded librarian, who was unaffiliated with the study, using criteria adapted from the rubric for the Fresno Test of Competence in Evidence-Based Medicine (supplemental Appendix E) [36], which is a reliable tool for assessing learners’ performance in clinical scenarios requiring evidence-based approaches. The rubric accompanying the Fresno Test assesses whether example clinical questions contain key PICO components and thus represent well-developed EBM questions. The rubric from the Fresno Test was validated through distribution to teachers of EBM and revised based on expert suggestions.
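The daily selection rule described above can be sketched in code. This is only an illustration under stated assumptions: the `TranscribedQuestion` structure, the idea of tagging descriptors per PICO component, and the order in which the criteria are applied (components first, then descriptors, then length) are hypothetical choices made for the example and are not taken from the study instrument.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

PICO_COMPONENTS = ("population", "intervention", "comparison", "outcome")


@dataclass
class TranscribedQuestion:
    """Hypothetical record of one clinical question heard on rounds."""
    text: str
    # Descriptors the observer noted for each PICO component that is present.
    descriptors: Dict[str, List[str]] = field(default_factory=dict)

    def component_count(self) -> int:
        # Number of PICO components with at least one descriptor.
        return sum(1 for c in PICO_COMPONENTS if self.descriptors.get(c))

    def descriptor_count(self) -> int:
        # Total number of descriptors across all PICO components.
        return sum(len(self.descriptors.get(c, [])) for c in PICO_COMPONENTS)


def select_for_evaluation(
    questions: List[TranscribedQuestion],
) -> Optional[TranscribedQuestion]:
    """Pick the most completely formed question of the day.

    Assumed ordering: more components, then more descriptors,
    then the longer question text as the tie-breaker.
    """
    if not questions:
        return None  # a day with no clinical questions yields nothing to rate
    return max(
        questions,
        key=lambda q: (q.component_count(), q.descriptor_count(), len(q.text)),
    )
```

Returning `None` for an empty day mirrors the situation in which no clinical question is available to forward for rating.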

A 12-item post-rotation survey was developed based on review of published literature (supplemental Appendix F). Attendance was taken at the start of each day of rounds. Medical students, interns, residents, and attending participants who were present for at least one day of CLR and one day of NCLR were provided with the post-rotation survey in paper format immediately following rounds on their final days of inpatient service. Participants who were not available during that time were sent an email with an identical online REDCap survey for them to complete. Participants were asked to reflect using a Likert scale (1=very low; 5=very high) on self-perceived (1) ability to formulate a question about patient care in the PICO format, (2) comfort in conducting an online medical literature search, and (3) confidence in finding articles to answer their PICO questions before and after their rotations. Respondents were further asked about their clinical questioning during the rotation, the usefulness of having the CL on rounds, and the effect of clinical questioning on patient care. No incentive was provided for survey completion, and study involvement was not tied to learner evaluation in any way.

Descriptive statistics were used to summarize variables of interest. Analysis assessed differences in clinical questioning between CLR and NCLR. Wilcoxon rank-sum tests were used for ordinal data to compare the number of questions asked and answered between CLR and NCLR and to compare the quality of questions in each PICO category and in total quality score between CLR and NCLR after grading from a blinded evaluator. Independent-sample t-tests were used for continuous data to compare the time spent asking or answering questions and the time spent rounding per patient between CLR and NCLR. Multivariable regression models were used to test whether the presence of the CL, department, time spent rounding per patient, academic year of residency, or participant training level were associated with question quantity or question quality. Wilcoxon signed-rank paired tests were used for paired post-rotation survey data to determine whether survey respondents’ ability, comfort, and confidence changed before and after their exposure to CL rounds. Chi-squared tests were used for categorical data to determine if survey response rates differed based on department or level of training. All analyses were performed in Stata 14.0, and statistical significance was defined as p<0.05 [37].
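For readers unfamiliar with the rank-sum comparison used for the question counts, the following is a minimal sketch of a Wilcoxon rank-sum test using the normal approximation with a tie correction. It is written with the Python standard library only and is not the Stata routine used in the study; the two lists of daily question counts are hypothetical values invented for illustration, not study data.

```python
import math
from collections import Counter
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Return (z, two-sided p) for the rank sum of x against y."""
    combined = list(x) + list(y)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks = average_ranks(combined)
    w = sum(ranks[:n1])                      # rank sum of the first group
    expected = n1 * (n + 1) / 2              # mean of W under the null
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (w - expected) / math.sqrt(variance)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Hypothetical daily question counts, for illustration only.
hypothetical_nclr = [0, 2, 3, 3, 4, 1, 5, 3]
hypothetical_clr = [4, 5, 6, 2, 7, 5, 9, 5]
z, p = rank_sum_test(hypothetical_nclr, hypothetical_clr)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A negative z here indicates that the first group (no CL) tends to have lower ranks than the second, which is the sign convention suggested by the test statistics in Table 1.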

This project was exempted by the University of Chicago’s Institutional Review Board (IRB16-0629).

Data were collected from rounds for a total of 50 days over the 10-week study period. Observations were split evenly between CLR (n=25) and NCLR (n=25) and between pediatrics (n=25) and internal medicine (n=25). The number of questions asked and answered and the time spent asking and answering clinical questions were significantly greater for CLR than for NCLR (Table 1). On 4 occasions, the CL answered questions from a previous day. In multivariable regression models controlling for department, time spent rounding per patient, and academic year of residency, our results remained unchanged.

Table 1 Differences in number of questions and time spent asking and answering clinical questions between clinical librarian present on rounds and clinical librarian not present on rounds

| | Clinical librarian present (n=25) | Clinical librarian not present (n=25) | Test statistic | p-value |
| --- | --- | --- | --- | --- |
| Number of questions asked* | 5 (2–9) | 3 (0–9) | –2.76 | <0.01‡ |
| Number of questions answered* | 3 (1–9) | 2 (0–7) | –3.35 | <0.01‡ |
| Time asking questions (min)† | 1.39 (1.11) | 0.52 (0.49) | –3.58 | <0.01§ |
| Time answering questions (min)† | 2.15 (1.90) | 1.05 (1.19) | –2.46 | 0.02§ |
| Time rounding per patient (min)† | 11.82 (4.30) | 11.71 (4.33) | –0.09 | 0.93§ |

* Median value with range in parentheses.

† Mean value with standard deviation in parentheses.

‡ Wilcoxon rank-sum test.

§ Independent-sample t-test.
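As a rough consistency check, the t statistics in Table 1 can be approximated from the published means, standard deviations, and group sizes alone. The sketch below assumes a pooled-variance (equal-variance) two-sample t-test and a "not present minus present" direction for the difference; the source states only that independent-sample t-tests were used, so both are assumptions. With equal group sizes, the pooled and unequal-variance forms give the same statistic.

```python
import math


def t_from_summary(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Pooled-variance two-sample t statistic for (a - b) from summary data."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_err


# (mean, SD) pairs copied from Table 1; n = 25 days in each group.
table_1_times = {
    "Time asking questions (min)": ((0.52, 0.49), (1.39, 1.11)),
    "Time answering questions (min)": ((1.05, 1.19), (2.15, 1.90)),
    "Time rounding per patient (min)": ((11.71, 4.33), (11.82, 4.30)),
}

for label, ((m_nclr, sd_nclr), (m_clr, sd_clr)) in table_1_times.items():
    t = t_from_summary(m_nclr, sd_nclr, 25, m_clr, sd_clr, 25)
    print(f"{label}: t = {t:.2f}")
```

The recomputed values fall within about 0.01 of the reported statistics; small differences are expected because the inputs are rounded to two decimal places.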

No questions were submitted through the online submission form. Five of the total 203 questions (2.5%) recorded during the study period were submitted by email to the CL. All questions posed to the CL were addressed on rounds or with a follow-up email to the asker or the asker’s attending.

The most complete clinical question was rated for quality from each day of rounds (n=49). There were no clinical questions asked during 1 day of rounds; therefore, no clinical question was recorded for that day. Questions from CLR were significantly more likely to contain each of the 4 components of PICO questions than questions from NCLR (Figure 1). Overall, on a scale from 0 to 12, participants scored a median of 6 (range of 1 to 12) with a mean of 6.28 (standard error of 0.71) on CLR and a median of 3 (range of 0 to 5) with a mean of 2.63 (standard error of 0.27) on NCLR. In multivariable regression models controlling for department, time spent rounding per patient, participant training level, and academic year of residency, our results remained unchanged.

Figure 1 Population, intervention, comparison, outcome (PICO) question quality scores

One clinical question from rounds each day (n=49) was scored using a rubric adapted from the Fresno Test by a blinded evaluator. Each question received a score from 0 to 3 in 4 categories corresponding to the elements of well-formed PICO questions. The total score was out of a maximum of 12 points.
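The scoring arithmetic described in this legend can be expressed as a short sketch. Only the structure (four PICO categories, each scored 0 to 3, summed to a maximum of 12) comes from the text; the function name and the dictionary layout are hypothetical, and the criteria for awarding each point level are defined in the adapted rubric (supplemental Appendix E) and are not reproduced here.

```python
PICO_CATEGORIES = ("population", "intervention", "comparison", "outcome")
MAX_PER_CATEGORY = 3


def total_quality_score(category_scores):
    """Sum four per-category scores (each 0-3) into a total out of 12."""
    missing = [c for c in PICO_CATEGORIES if c not in category_scores]
    if missing:
        raise ValueError(f"missing category scores: {missing}")
    total = 0
    for category in PICO_CATEGORIES:
        score = category_scores[category]
        if not isinstance(score, int) or not 0 <= score <= MAX_PER_CATEGORY:
            raise ValueError(f"{category} score must be an integer from 0 to 3")
        total += score
    return total


# Hypothetical evaluator scores for a single question.
example = {"population": 3, "intervention": 2, "comparison": 0, "outcome": 1}
print(total_quality_score(example), "out of", MAX_PER_CATEGORY * len(PICO_CATEGORIES))
```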

Fifty surveys were distributed, and 45 surveys were completed, representing a 90% response rate. In pediatrics, 28 surveys were distributed and 27 were completed (96%), while in internal medicine, 22 surveys were distributed and 18 were completed (82%). All 20 medical students completed the survey, 10 of 11 interns completed the survey (91%), 11 of 14 residents completed the survey (79%), and 4 of 5 attendings completed the survey (80%). There was no significant difference in response rate based on department (χ²=0.29; p=0.59) or level of training (χ²=0.48; p=0.92).
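The response rates above follow directly from the reported counts, as the short check below illustrates. The counts are copied from the paragraph; the helper name and dictionary layout are hypothetical, and the check covers only the percentages and subgroup totals, not the chi-squared tests.

```python
def response_rate(completed, distributed):
    """Response rate as a whole-number percentage."""
    return round(100 * completed / distributed)


# (completed, distributed) counts as reported in the text.
by_department = {"pediatrics": (27, 28), "internal medicine": (18, 22)}
by_level = {
    "medical students": (20, 20),
    "interns": (10, 11),
    "residents": (11, 14),
    "attendings": (4, 5),
}

for group in (by_department, by_level):
    for name, (completed, distributed) in group.items():
        print(f"{name}: {response_rate(completed, distributed)}%")
    # Each breakdown should add up to the overall 45 of 50 surveys.
    print("subtotal:",
          sum(c for c, _ in group.values()), "of",
          sum(d for _, d in group.values()))
```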

On a Likert scale from 1 to 5, participants reported an ability to formulate a clinical question with a median of 3 prior to their rotations and a median of 4 at the end of their rotations (z=−4.73; p<0.01). Similarly, participants reported comfort conducting a literature search with a median of 3 prior to their rotations and a median of 4 at the end of their rotations (z=−2.90; p<0.01). Participants reported confidence in finding an article to answer a clinical question with a median of 3 prior to their rotations and a median of 3 at the end of their rotations. However, the distribution of scores changed significantly, with 12 participants (27%) reporting a confidence level of at least 4 with respect to their pre-rotation ability and 22 participants (49%) reporting a confidence level of at least 4 with respect to their post-rotation ability (z=−3.60; p<0.01).

Participants were also asked to reflect on questions regarding their rotation experience with EBM. Thirty-three of 40 responses (83%) indicated that the CL had added to their learning, and 28 of 38 responses (74%) indicated that the CL had increased the relevance of questions they asked. Notably, several of these participants described the impact of the CL in this regard, with one writing, “it helped to get answers faster and get high quality data,” and another stating, “we learned about finding the most updated article on a topic, how to filter PubMed for reviews, and discussed how to keep up to date with reading journals.”

A third question asked how clinical questioning had changed patient care. Thirteen of 39 responses (33%) indicated that clinical questioning had changed patient care decisions. For example, a participant explained that care was changed when the CL’s research prompted them to use “Lasix [furosemide] instead of HCTZ [hydrochlorothiazide] in [a] patient with hyponatremia.” Another respondent wrote that information from the CL helped “narrow-in on a diagnosis for a patient with a complication of peritoneal dialysis.” A third participant described that research by the CL had helped them decide “whether to bridge a patient with heparin.”

During CLR, there were fifteen additional nonclinical general questions directed to the CL about how to find EBM resources. These included questions about assessing articles’ sources, using PubMed effectively, and employing different search strategies for distinct types of clinical questions. These questions were not included as clinical questions in the above analysis. There were no general nonclinical questions about finding EBM resources during NCLR.

We found that the presence of a CL on inpatient rounds was associated with increased quantity and quality of clinical questioning and was subjectively perceived as improving participants’ EBM skills and changing patient care decisions. While similar findings have been reported [15, 22, 23, 33], this study is novel in that it includes an evaluation of previously unexplored aspects of clinical questioning via direct observation of a CL’s effects on rounds. In addition, survey responses suggest that involvement of CLs in rounds can improve EBM skills and care management decisions. This supports findings reported by Marshall and colleagues, who previously described survey findings showing CLs to be perceived as valuable to clinical learning and decisions at an even higher rate than reported in this study [21].

These results are significant in that they provide evidence that the physical presence of a CL during rounds can prompt clinicians to verbalize and pursue questions, spend significantly more time discussing and answering questions, and learn about resources for EBM without significantly increasing the amount of time spent on rounds. Furthermore, the presence of a CL on rounds may be associated with changes in patient care management that are based on evidence from literature searches during rounds.

Balancing time demands with the need to practice EBM and remain up-to-date with the medical literature is a significant challenge for practicing physicians today. These stressors may contribute to physician burnout, which has been shown to have negative effects on patient care, including major medical and medication errors and suboptimal care practices [38–40]. Embedding CLs into inpatient rounds can educate providers about search strategies and provide answers to clinical questions in real time, thus helping to reduce physicians’ daily workload and enabling them to practice high-quality evidence-based care in a more meaningful and satisfying manner.

Earlier work has reported on the obstacles preventing clinicians from answering their clinical questions [3]. This study corroborates previous findings of obstacles, given that more questions were vocalized and more answers were provided during CLR. While a consult information service may also be effective [26], the relative disuse of the online question submission form and email for information consultations in this study suggests that a consult service would not affect clinicians’ EBM practices as significantly as CLs joining rounds. Rather, this suggests that the physical presence of a CL on rounds is a more effective means of providing real-time answers to clinical questions that arise on rounds, though more work needs to be done to determine if other forms of consult information services would be more effective. Previous studies have also suggested that clinical settings may be most appropriate for learning EBM skills [41]. By offering on-site, real-time information assistance on rounds, CLs likely encourage questioning and aid the development of EBM skills and practice.

The findings from this study are significant in their implications for the potential role of CLs during rounds, though there are important limitations to note. Most significantly, the observer might have introduced bias or subjectivity into the study during the gathering of rounding data or the forwarding of the most complete clinical questions to the blinded evaluator. Next, this was a single-institution study, and while both pediatric and internal medicine inpatient teams were included, the results might not be broadly applicable, as this institution might have a culture that does not generalize to all academic centers. Additionally, the importance of PICO formatting has been disputed, with some authors rejecting or qualifying its usefulness and its relevance to certain types of clinical questions [5, 42–44]. We used the PICO format as a way to track changes associated with the presence of a CL, as the PICO format is relatively accepted in the literature and previous work has suggested that the PICO format improves EBM skills [44–47]. Furthermore, we did not explore long-term changes in learners’ ability to search for and implement EBM and did not directly measure patient outcomes. Likewise, self-reported abilities to search for and implement EBM were assessed only in a post-rotation survey and might be subject to recall bias. Although the surveys aimed to provide a more holistic view into EBM practices, they were distributed at the end of participants’ rotations and might not accurately reflect respondents’ abilities or the CL’s impact. Also, the extra time spent asking and answering questions on CLR might have detracted from rounds in an unmeasured way. Finally, participant behavior might have been altered on CLR by the presence of an observer. While the presence of the observer during NCLR likely controlled for this effect, the true baseline questioning habits of participants are not known.

As the role of the CL evolves, institutions that already employ CLs may benefit from embedding CLs and involving them during rounds to assist with EBM searches related to patient care. While there are many other ways to teach EBM skills, this intervention does not require extra time of already busy clinicians and functions to update clinicians’ knowledge [25–28, 48]. Future work should be done to increase the generalizability of these findings and to determine whether certain services or patients would benefit most from CL services, as has been previously suggested [12]. Furthermore, future work could investigate other ways in which CLs affect clinicians, such as by further study of changes in EBM ability and assessment of long-term maintenance of change as well as changes in provider stress and burnout.

Having a CL embedded on inpatient rounds was associated with a greater quantity and quality of clinical questions on rounds and perceived improvements in EBM skills and clinical management. As the medical literature continues to expand, clinicians must frequently update their medical knowledge despite stressful time constraints. Given the results of this study and previous work, embedding and including CLs during rounds represents a solution to improve clinicians’ EBM skills by providing real-time information, thus better connecting recent literature to clinical practice.

Appendix A Direct observation instrument

Appendix B Population, intervention, comparison, outcome (PICO) question submission form

Appendix C Population, intervention, comparison, outcome (PICO) information card

Appendix D Rounding schedule

Appendix E Adapted rubric from the Fresno Test of Competence in Evidence-Based Medicine

Appendix F Post-rotation survey

We acknowledge Michelle Bass for evaluating question quality and the University of Chicago Pritzker School of Medicine Summer Research Program for funding. We also thank the fifty physicians and medical students who participated in this study.

1 Haynes RB, Hayward RS, Lomas J. Bridges between health care research evidence and clinical practice. J Am Med Inform Assoc. 1995 Dec;2(6):342.

2 Mulvaney SA, Bickman L, Giuse NB, Lambert EW, Sathe NA, Jerome RN. A randomized effectiveness trial of a clinical informatics consult service: impact on evidence-based decision-making and knowledge implementation. J Am Med Inform Assoc. 2008 Apr;15(2):203–11.

3 Ely JW, Osheroff JA, Ebell MH, Chambliss ML, Vinson DC, Stevermer JJ, Pifer EA. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ. 2002 Mar 23;324(7339):710.

4 Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. Getting research findings into practice: closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. BMJ. 1998 Aug 15;317(7156):465.

5 Ely JW, Osheroff JA, Maviglia SM, Rosenbaum ME. Patient-care questions that physicians are unable to answer. J Am Med Inform Assoc. 2007 Aug;14(4):407–14.

6 Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians’ clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005 Mar–Apr;12(2):217–24.

7 Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, Evans ER. Analysis of questions asked by family physicians regarding patient care. West J Med. 2000 May;172(5):315–9.

8 Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med. 2014 May;174(5):710–8.

9 Brassil E, Gunn B, Shenoy AM, Blanchard R. Unanswered clinical questions: a survey of specialists and primary care providers. J Med Libr Assoc. 2017 Jan;105(1):4–11. DOI: http://dx.doi.org/10.5195/jmla.2017.101.

10 Dugdale DC, Epstein R, Pantilat SZ. Time and the patient-physician relationship. J Gen Intern Med. 1999 Jan;14(S1):S34–40.

11 Society of General Internal Medicine (SGIM) Career Satisfaction Study Group (CSSG), Linzer M, Konrad TR, Douglas J, McMurray JE, Pathman DE, Williams ES, Schwartz MD, Gerrity M, Scheckler W, Bigby J, Rhodes E. Managed care, time pressure, and physician job satisfaction: results from the physician worklife study. J Gen Intern Med. 2000 Jul;15(7):441–50.

12 Esparza JM, Shi R, McLarty J, Comegys M, Banks DE. The effect of a clinical medical librarian on in-patient care outcomes. J Med Libr Assoc. 2013 Jul;101(3):185–91. DOI: http://dx.doi.org/10.3163/1536-5050.101.3.007.

13 Accreditation Council for Graduate Medical Education. Internal medicine: program requirements and FAQs [Internet]. The Council; 2008 [cited 16 Jan 2018]. <http://www.acgme.org/Specialties/Program-Requirements-and-FAQs-and-Applications/pfcatid/2/Internal%20Medicine>.

14 Learning objectives for medical student education—guidelines for medical schools: report I of the Medical School Objectives Project. Acad Med. 1999 Jan;74(1):13–8.

15 Brettle A, Maden-Jenkins M, Anderson L, McNally R, Pratchett T, Tancock J, Thornton D, Webb A. Evaluating clinical librarian services: a systematic review. Health Inf Libr J. 2011 Mar;28(1):3–22.

16 Perrier L, Farrell A, Ayala AP, Lightfoot D, Kenny T, Aaronson E, Allee N, Brigham T, Connor E, Constantinescue T, Muellenbach J, Epstein HB, Weiss A. Effects of librarian-provided services in healthcare settings: a systematic review. J Am Med Inform Assoc. 2014 Dec;21(6):1118–24.

17 Cheng GYT. Educational workshop improved information-seeking skills, knowledge, attitudes and the search outcome of hospital clinicians: a randomised controlled trial. Health Inf Libr J. 2003 Jun;20(suppl 1):22–33.

18 Jeffery KM, Maggio L, Blanchard M. Making generic tutorials content specific: recycling evidence-based practice (EBP) tutorials for two disciplines. Med Ref Serv Q. 2009 Spring;28(1):1–9.

19 Cabell CH, Schardt C, Sanders L, Corey GR, Keitz SA. Resident utilization of information technology. J Gen Intern Med. 2001 Dec;16(12):838–44.

20 Linton AM. Emerging roles for librarians in the medical school curriculum and the impact on professional identity. Med Ref Serv Q. 2016 Oct;35(4):414–33.

21 Marshall JG, Sollenberger J, Easterby-Gannett S, Morgan LK, Klem ML, Cavanaugh SK, Oliver KB, Thompson CA, Romanosky N, Hunter S. The value of library and information services in patient care: results of a multisite study. J Med Libr Assoc. 2013 Jan;101(1):38–46. DOI: http://dx.doi.org/10.3163/1536-5050.101.1.007.

22 Wagner KC, Byrd GD. Evaluating the effectiveness of clinical medical librarian programs: a systematic review of the literature. J Med Libr Assoc. 2004 Jan;92(1):14–33.

23 Winning MA, Beverley CA. Clinical librarianship: a systematic review of the literature. Health Inf Libr J. 2003 Jun;20(suppl 1):10–21.

24 Weightman AL, Williamson J, Library & Knowledge Development Network (LKDN) Quality and Statistics Group. The value and impact of information provided through library services for patient care: a systematic review. Health Inf Libr J. 2005 Mar;22(1):4–25.

25 Klein MS, Ross FV, Adams DL, Gilbert CM. Effect of online literature searching on length of stay and patient care costs. Acad Med. 1994 Jun;69(6):489–95.

26 McGowan J, Hogg W, Zhong J, Zhao X. A cost-consequences analysis of a primary care librarian question and answering service. PLOS One. 2012;7(3):e33837.

27 Banks DE, Shi R, Timm DF, Christopher KA, Duggar DC, Comegys M, McLarty J. Decreased hospital length of stay associated with presentation of cases at morning report with librarian support. J Med Libr Assoc. 2007 Oct;95(4):381–7. DOI: http://dx.doi.org/10.3163/1536-5050.95.4.381.

28 Booth A, Sutton A, Falzon L. Evaluation of the clinical librarian project. Leicester, UK: University Hospitals of Leicester NHS Trust; 2002.

29 Grefsheim SF, Whitmore SC, Rapp BA, Rankin JA, Robison RR, Canto CC. The informationist: building evidence for an emerging health profession. J Med Libr Assoc. 2010 Apr;98(2):147–56. DOI: http://dx.doi.org/10.3163/1536-5050.98.2.007.

30 Aitken EM, Powelson SE, Reaume RD, Ghali WA. Involving clinical librarians at the point of care: results of a controlled intervention. Acad Med. 2011 Dec;86(12):1508–12.

31 Urquhart C, Turner J, Durbin J, Ryan J. Changes in information behavior in clinical teams after introduction of a clinical librarian service. J Med Libr Assoc. 2007 Jan;95(1):14–22.

32 Vaughn CJ. Evaluation of a new clinical librarian service. Med Ref Serv Q. 2009 Summer;28(2):143–53.

33 Herrmann LE, Winer JC, Kern J, Keller S, Pavuluri P. Integrating a clinical librarian to increase trainee application of evidence-based medicine on patient family-centered rounds. Acad Pediatr. 2017 Apr;17(3):339–41.

34 Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap) - a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–81.

35 Sackett D, Richardson W, Rosenberg W, Haynes R. Evidence-based medicine: how to practice and teach EBM. New York, NY: Churchill Livingstone; 1997.

36 Ramos KD, Schafer S, Tracz SM. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003 Feb 8;326(7384):319–21.

37 StataCorp. Stata statistical software: release 14. College Station, TX: StataCorp; 2015.

38 West CP, Tan AD, Habermann TM, Sloan JA, Shanafelt TD. Association of resident fatigue and distress with perceived medical errors. JAMA. 2009 Sep 23;302(12):1294.

39 West CP, Shanafelt TD, Kolars JC. Quality of life, burnout, educational debt, and medical knowledge among internal medicine residents. JAMA. 2011 Sep 7;306(9):952–60. DOI: http://dx.doi.org/10.1001/jama.2011.1247.

40 Fahrenkopf AM, Sectish TC, Barger LK, Sharek PJ, Lewin D, Chiang VW, Edwards S, Wiedermann BL, Landrigan CP. Rates of medication errors among depressed and burnt out residents: prospective cohort study. BMJ. 2008 Mar 1;336(7642):488–91.

41 Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? a systematic review. BMJ. 2004 Oct 28;329(7473):1017.

42 Cheng GYT. A study of clinical questions posed by hospital clinicians. J Med Libr Assoc. 2004 Oct;92(4):445–58.

43 Kloda LA, Bartlett JC. A characterization of clinical questions asked by rehabilitation therapists. J Med Libr Assoc. 2014 Apr;102(2):69–77. DOI: http://dx.doi.org/10.3163/1536-5050.102.2.002.

44 Huang X, Lin J, Demner-Fushman D. Evaluation of PICO as a knowledge representation for clinical questions. AMIA Annu Symp Proc. 2006:359–63.

45 Bergus GR, Randall CS, Sinift SD, Rosenthal DM. Does the structure of clinical questions affect the outcome of curbside consultations with specialty colleagues? Arch Fam Med. 2000 Jun;9(6):541–7.

46 Richardson WS, Wilson MC, Nishikawa J, Hayward RS. The well-built clinical question: a key to evidence-based decisions. ACP J Club. 1995 Nov–Dec;123(A12). (Available from: <https://acpjc.acponline.org/Content/123/3/issue/ACPJC-1995-123-3-A12.htm> [cited 16 Jan 2018].)

47 Straus SE, Richardson WS, Glasziou P, Haynes RB. Evidence-based medicine: how to practice and teach it. 4th ed. Churchill Livingstone; 2010. 312 p.

48 Ilic D, Maloney S. Methods of teaching medical trainees evidence-based medicine: a systematic review. Med Educ. 2014 Feb;48(2):124–35.

Riley Brian, rbrian@uchicago.edu, Medical Student, University of Chicago Pritzker School of Medicine, Chicago, IL

Nicola Orlov, MD, nmeyerorlov@peds.bsd.uchicago.edu, Assistant Professor of Pediatrics, Department of Academic Pediatrics, University of Chicago, Chicago, IL

Debra Werner, MLIS, dwerner@uchicago.edu, Librarian for Science Instruction and Outreach and Biomedical Reference, John Crerar Library, University of Chicago, Chicago, IL

Shannon K. Martin, MD, MS, smartin1@bsd.uchicago.edu, Assistant Professor of Medicine, Department of Medicine, University of Chicago, Chicago, IL

Vineet M. Arora, MD, MAPP, varora@medicine.bsd.uchicago.edu, Assistant Professor of Medicine, Department of Medicine, University of Chicago, Chicago, IL

Maria Alkureishi, MD, FAAP, malcocev@peds.bsd.uchicago.edu, Assistant Professor of Pediatrics, Department of Academic Pediatrics, University of Chicago, Chicago, IL

Articles in this journal are licensed under a Creative Commons Attribution 4.0 International License.

This journal is published by the University Library System of the University of Pittsburgh as part of its D-Scribe Digital Publishing Program and is cosponsored by the University of Pittsburgh Press.

Journal of the Medical Library Association, VOLUME 106, NUMBER 2, March 2018